Introduction

Motivated forgetting is a theorised psychological behaviour in which people may forget unwanted memories, either consciously or unconsciously. Defence mechanisms are coping techniques, often unconscious, used to reduce anxiety arising from unacceptable or potentially harmful impulses, so motivated forgetting can in some respects be regarded as a defence mechanism. Defence mechanisms are not, however, to be confused with deliberate, conscious coping strategies.

Thought suppression is a method by which people protect themselves by blocking the recall of these anxiety-arousing memories. For example, if something reminds a person of an unpleasant event, their mind may steer towards unrelated topics. This can induce forgetting even without an explicit intention to forget, and it still counts as motivated because it serves to keep the unpleasant memory out of mind. There are two main classes of motivated forgetting: psychological repression, which is an unconscious act, and thought suppression, which is a conscious way of excluding thoughts and memories from awareness.

Refer to An Overview of Motivated Reasoning and Emotional Reasoning.

Brief History

Neurologist Jean-Martin Charcot was the first to research hysteria as a psychological disorder, in the late 19th century. Sigmund Freud, Joseph Breuer, and Pierre Janet continued the research on hysteria that Charcot had begun. They concluded that hysteria was an intense emotional reaction to some form of severe psychological disturbance, and proposed that incest and other sexual traumas were its most likely cause. The treatment that Freud, Breuer, and Janet agreed upon was named the talking cure: a method of encouraging patients to recover and discuss their painful memories. During this time, Janet coined the term dissociation to refer to a lack of integration among various memories. He used it to describe the way in which traumatising memories are stored separately from other memories.

The idea of motivated forgetting began with the philosopher Friedrich Nietzsche in 1894. Nietzsche and Sigmund Freud had similar views on the repression of memories as a form of self-preservation. Nietzsche wrote that man must forget in order to move forward. He argued that this process is active, in that we forget specific events as a defence mechanism.

The publication of Freud’s famous paper, “The Aetiology of Hysteria”, in 1896 led to much controversy over these traumatic memories. Freud stated that neuroses were caused by repressed sexual memories, which implied that incest and sexual abuse must be common throughout upper- and middle-class Europe. The psychological community did not accept Freud’s ideas, and years passed without further research on the topic.

Interest in memory disturbances revived during World War I and World War II, when many cases of memory loss appeared among war veterans, especially those who had experienced shell shock. Hypnosis and drugs became popular treatments for hysteria during the wars. The term post-traumatic stress disorder (PTSD) was introduced after similar cases of memory disturbance appeared among veterans of the Korean War. Forgetting, or the inability to recall a portion of a traumatic event, was considered a key factor in the diagnosis of PTSD.

Ann Burgess and Lynda Holmstrom investigated trauma-related memory loss in rape victims during the 1970s. This marked the beginning of a large outpouring of accounts of childhood sexual abuse. It was not until 1980 that memory loss resulting from all forms of severe trauma was recognised as involving the same set of processes.

The False Memory Syndrome Foundation (FMSF) was created in 1992 in response to the large number of memories claimed to have been recovered. The FMSF opposed the idea that memories could be recovered using specific techniques; instead, its members believed that the “memories” were actually confabulations created through the inappropriate use of techniques such as hypnosis.

Theories

Several theories relate to the process of motivated forgetting.

The main theory, the motivated forgetting theory, suggests that people forget things either because they do not want to remember them or for some other particular reason. Painful and disturbing memories are made unconscious and very difficult to retrieve, but still remain in storage. Retrieval suppression (the ability to use inhibitory control to prevent memories from being recalled into consciousness) is one way in which we can stop the retrieval of unpleasant memories using cognitive control. Anderson and Green tested this theory using the Think/No-Think paradigm.

Decay theory is another theory of forgetting, which attributes memory loss to the passage of time. When information enters memory, neurons are activated, and the memory is retained as long as those neurons remain active. Activation can be maintained through rehearsal or frequent recall; if it is not maintained, the memory trace fades and decays. This usually occurs in short-term memory. Decay theory is controversial amongst modern psychologists. Bahrick and Hall, for instance, disagree with it: they have claimed that people can remember algebra they learnt at school even years later, and that a refresher course brought their skill back to a high standard relatively quickly. These findings suggest that forgetting in human memory involves more than trace decay alone.

Interference theory is another theory of forgetting; it posits that subsequent learning can interfere with and degrade a person’s memories. In one test of this theory, participants were given ten nonsense syllables. Some of the participants then slept after viewing the syllables, while the others carried on their day as usual. Those who stayed awake recalled the syllables poorly, while those who slept remembered them better. This may be because the sleeping participants experienced no interference during the experiment, while the others did. There are two types of interference: proactive interference and retroactive interference. Proactive interference occurs when you are unable to learn a new task because an old task you have already learned interferes with it. Research has shown that students who study similar subjects at the same time often experience interference. Retroactive interference occurs when learning a new task causes you to forget a previously learnt one.

The Gestalt theory of forgetting, proposed by Gestalt psychologists, suggests that memories are forgotten through distortion. This is also called false memory syndrome. The theory states that when memories lack detail, other information is filled in to make the memory whole, which leads to incorrect recall.

Criticisms

The term recovered memory, known in some cases as a false memory, refers to the theory that some memories can be repressed by an individual and later recovered. Recovered memories are often used as evidence in cases where a defendant is accused of sexual or some other form of child abuse and the accuser has recently recovered a repressed memory of the abuse. This has created much controversy, and as the use of this form of evidence in the courts increases, the question has arisen of whether recovered memories actually exist. To determine whether false memories can be created, several laboratories have developed paradigms to test whether false repressed memories could be deliberately implanted in a subject. One result is the verbal paradigm, which holds that if someone is presented with a number of words associated with a single non-presented word, they are likely to falsely remember that word as having been presented.

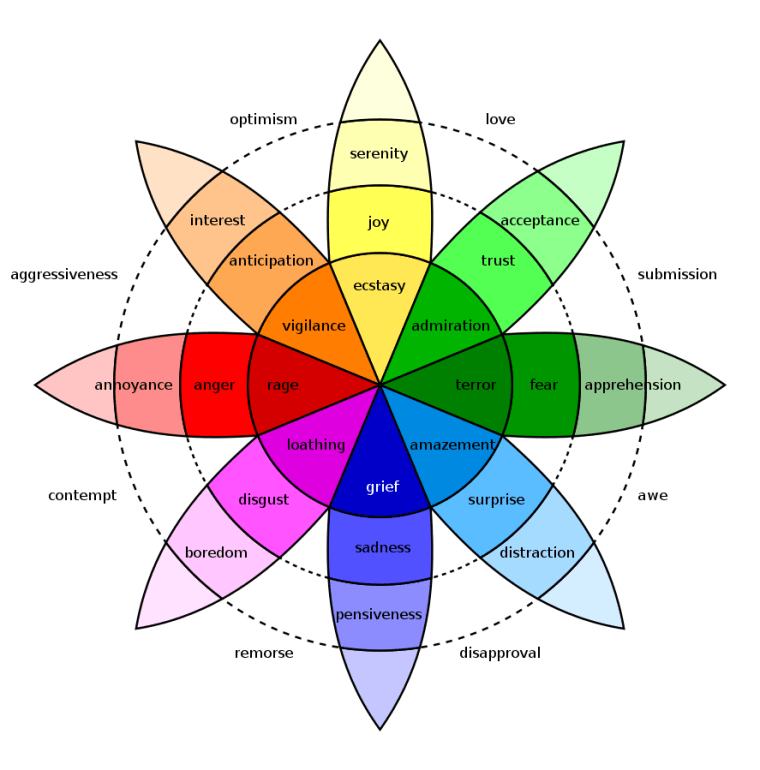

Similar to the verbal paradigm is fuzzy-trace theory, which holds that people encode two separate things about an event: the actual information itself and the semantic information surrounding it (the gist). If we are given a set of semantic details surrounding a false event, such as its time and location, we are more likely to falsely remember the event as having occurred. Related to this is source monitoring theory, which, among other things, holds that emotionally salient events tend to strengthen the memory that forms from them. Emotion also weakens our ability to remember the source of the memory. Source monitoring is thought to be centred in the anterior cingulate cortex.

Repressed memory therapy has come under heavy criticism because it is said to use techniques very similar to those used to deliberately implant a memory in an adult. These include asking questions about the gist of an event, creating imagery around that gist, and attempting to uncover the event from there. Combined with the fact that most repressed memories are emotionally salient, this makes the likelihood of source confusion high. A person might, for example, come to believe that a child abuse case they had heard about actually happened to them, and remember it through the imagery established in therapy.

Repression

The concept of psychological repression was developed in 1915 within Sigmund Freud’s psychoanalytic model. Repression is described as an automatic defence mechanism in which people unconsciously push unpleasant or intolerable thoughts and feelings into their unconscious.

When situations or memories occur that we are unable to cope with, we push them away. Repression is a primary ego defence mechanism that many psychotherapists readily accept. Numerous studies have supported the psychoanalytic theory that memories of murder, childhood trauma and sexual abuse can be repressed for a period of time and then recovered in therapy.

Repressed memories can influence behaviour unconsciously, manifesting themselves in our discussions, dreams, and emotional reactions. An example of repression is a child who is abused by a parent and later has no recollection of the events, but has trouble forming relationships. Freud proposed psychoanalysis as a treatment for repressed memories; its goal was to bring repressed memories, fears and thoughts back to conscious awareness.

Suppression

Thought suppression refers to conscious and deliberate efforts to curtail one’s thoughts and memories. Suppression is goal-directed and includes conscious strategies to forget, such as intentional context shifts. For example, if someone is having unpleasant thoughts, ideas that are inappropriate at the moment, or images that may instigate unwanted behaviours, they may try to think of anything but the unwanted thought in order to push it out of consciousness.

In order to suppress a thought, one must:

- Plan to suppress the thought; and

- Carry out that plan by suppressing all other manifestations of the thought, including the original plan.

Thought suppression therefore seems to entail a state of knowing and not knowing at once: the plan must be held in mind to be carried out, yet it must also be suppressed. This makes suppression a difficult and even time-consuming task. Even when thoughts are suppressed, they can return to consciousness with minimal prompting, which may be why suppression has also been associated with obsessive-compulsive disorder.

Directed Forgetting

Suppression encompasses directed forgetting, also known as intentional forgetting: forgetting that is initiated by a conscious goal to forget. Intentional forgetting is important at the individual level, for example in suppressing an unpleasant memory of a trauma or a particularly painful loss.

The directed forgetting paradigm is an experimental procedure used to show that information can be forgotten upon instruction. It has two methods: the item method and the list method. In both, participants are instructed to forget some items (the to-be-forgotten items) and to remember others (the to-be-remembered items). The directed forgetting paradigm was originally conceived by Robert Bjork, and the Bjork Learning and Forgetting Lab and members of the Cogfog group carried out much important research using the paradigm in subsequent years.

In the item method of directed forgetting, participants are presented with a series of to-be-remembered and to-be-forgotten items in random order. After each item, the participant is instructed either to remember it or to forget it. After this study phase, participants are given a test of all the words presented, including the to-be-forgotten items, which they did not expect to be tested on. Recall of the to-be-forgotten words is often significantly impaired compared with the to-be-remembered words. The directed forgetting effect has also been demonstrated on recognition tests, suggesting that to-be-forgotten items are less well stored rather than merely harder to retrieve. For this reason, researchers believe that the item method affects episodic encoding.

In the list method procedure, no instruction to forget is given until half of the list has been presented; instructions are given once in the middle of the list and once at the end. Participants study the first half (the first list), and those in the forget condition are then told that it was just a practice list and that they should focus their attention on the upcoming list. A second list is then presented. A final test follows, sometimes of only the first list and sometimes of both lists, in which participants are asked to recall all the words they studied. When participants are told they can forget the first list, they remember less of it and more of the second list. List method directed forgetting thus demonstrates the ability to intentionally reduce memory retrieval.

In support of this, researchers asked participants to keep a journal recording two unique events that happened to them each day over a five-day period. After these five days, the participants were told either to remember or to forget the events of those days. They then repeated the process for another five days, after which they were told to recall all the events from both weeks, regardless of earlier instructions. Participants in the forget group had worse recall for the first week than for the second week.

Two hypotheses have been proposed to explain directed forgetting: the retrieval inhibition hypothesis and the context shift hypothesis.

The retrieval inhibition hypothesis states that the instruction to forget the first list hinders memory of the list-one items. According to this hypothesis, directed forgetting only reduces the retrieval of unwanted memories rather than causing permanent loss: intentionally forgotten items are difficult to recall but are recognised if they are presented again.

The context shift hypothesis suggests that the instruction to forget mentally separates the to-be-forgotten items, placing them in a different context from the second list. The subject’s mental context changes between the first and second lists, and it is the second list’s context that remains in place at test, which impairs recall of the first list.

Psychogenic Amnesia

Motivated forgetting encompasses psychogenic amnesia, which refers to the inability to remember past experiences or personal information due to psychological factors rather than biological dysfunction or brain damage.

Psychogenic amnesia is not part of Freud’s theoretical framework. The memories still exist, buried deep in the mind, but can resurface at any time, either on their own or on exposure to a trigger in the person’s surroundings. Psychogenic amnesia is generally found in cases where there is profound and surprising forgetting of chunks of one’s personal life, whereas motivated forgetting includes more everyday examples in which people forget unpleasant memories in a way that would not call for clinical evaluation.

Psychogenic Fugue

Psychogenic fugue, a form of psychogenic amnesia, is a DSM-IV dissociative disorder in which people forget their personal history, including who they are, for a period of hours to days following a trauma. A history of depression, stress, anxiety or head injury could lead to fugue states. When the person recovers, they are able to remember their personal history but have amnesia for the events that took place during the fugue state.

Neurobiology

Motivated forgetting involves activity in the prefrontal cortex and associated regions, as shown by functional MRI (fMRI) scans of participants’ brains taken during testing. The regions involved include the anterior cingulate cortex, the intraparietal sulcus, the dorsolateral prefrontal cortex, and the ventrolateral prefrontal cortex. These areas are also associated with stopping unwanted actions, which supports the hypothesis that the suppression of unwanted memories and the suppression of unwanted actions follow a similar inhibitory process. These regions are also known to have executive functions within the brain.

The anterior cingulate cortex has functions linked to motivation and emotion. The intraparietal sulcus is involved in coordination between perception and motor activity, visual attention, symbolic numerical processing, visuospatial working memory, and interpreting the intentions behind the actions of others. The dorsolateral prefrontal cortex is involved in planning complex cognitive activities and in decision making.

The other key brain structure involved in motivated forgetting is the hippocampus, which is responsible for the formation and recollection of memories. When motivated forgetting is engaged, meaning that we actively attempt to suppress unwanted memories, the prefrontal cortex shows higher activity than baseline while hippocampal activity is suppressed. It has been proposed that the executive areas which control motivation and decision-making dampen hippocampal functioning in order to stop the recollection of the memories the subject is motivated to forget.

Examples

War

Motivated forgetting has been a crucial aspect of psychological study relating to traumatising experiences such as rape, torture, war, natural disasters, and homicide. Some of the earliest documented cases of memory suppression and repression relate to veterans of the First World War. Cases of motivated forgetting were numerous in wartime, mainly because of the hardships of trench life, injury, and shell shock. At the time many of these cases were documented, there were limited medical resources to address these soldiers’ mental well-being, and memory suppression and repression were less well understood.

Case of a Soldier (1917)

Many doctors and psychiatrists prescribed the repression of memories as treatment and deemed it effective for managing these memories. However, many soldiers’ traumas were far too vivid and intense to be dealt with in this way, as described in the journal of Dr. Rivers. One soldier, who entered hospital after losing consciousness in a shell explosion, is described as having a generally pleasant demeanour. This was disrupted by sudden bouts of depression occurring approximately every 10 days. This intense depression, which led to suicidal feelings, rendered him unfit to return to war. It soon became apparent that these symptoms were due to the patient’s repressed thoughts and apprehensions about returning to war. Dr. Smith suggested that the patient face his thoughts and allow himself to deal with his feelings and anxieties. Although the soldier became noticeably less cheerful as a result, he experienced only one further, minor bout of depression.

Abuse

Many cases of motivated forgetting have been reported in connection with recovered memories of childhood abuse. Abuse, particularly by relatives or figures of authority, can lead to memory suppression and repression lasting for varying lengths of time. One study indicates that 31% of abuse victims were aware of at least some forgetting of their abuse, and a combined analysis of seven studies has shown that one eighth to one quarter of abuse victims have periods of complete unawareness (amnesia) of the incident or series of events. Factors associated with forgetting abuse include younger age at onset, threats or intense emotions, more types of abuse, and a greater number of abusers. Recovery has been found to be cued in 90% of cases, usually by one specific event triggering the memory. For example, the return of incest memories has been shown to be brought on by television programmes about incest, the death of the perpetrator, the abuse of the subject’s own child, and seeing the site of the abuse. In a study by Herman and Schatzow, corroborating evidence was found for the same proportion of individuals with continuous memories of abuse as of those with recovered memories: 74% of cases in each group were confirmed. The cases of Mary de Vries and Claudia are examples of confirmed recovered memories of sexual abuse.

Legal Controversy

Motivated forgetting and repressed memories have become a very controversial issue in the court system. Courts are currently dealing with historical cases, in particular a relatively new category of cases known as historic child sexual abuse (HCSA). HCSA refers to allegations of child abuse that occurred several years before the time at which they are prosecuted.

Unlike most American states, Canada, the United Kingdom, Australia and New Zealand have no statute of limitations restricting the prosecution of historical offences. Legal decision-makers in each case must therefore evaluate the credibility of allegations that may go back many years. Because it is nearly impossible to provide evidence in many of these historical abuse cases, the credibility of both the witness and the accused is extremely important in deciding whether the defendant is guilty.

One of the main arguments against the credibility of historical allegations involving the retrieval of repressed memories rests on false memory syndrome. According to this concept, through therapy and the use of suggestive techniques, clients come to mistakenly believe that they were sexually abused as children.

In the United States, statutes of limitations require that legal action be taken within three to five years of the incident in question. Exceptions are made for minors, who have until they reach eighteen years of age.

Many factors affect the age at which child abuse cases may be presented. These include bribes, threats, dependency on the abuser, and the child’s unawareness that they are being harmed. All of these factors may lead a person who has been harmed to require more time to present their case. As in the case of Jane Doe and Jane Roe, time may also be required if memories of the abuse have been repressed or suppressed. In 1981, the statute was adjusted to make exceptions for individuals who were not consciously aware that their situation was harmful. This exception, called the discovery rule, is applied at the discretion of the judge in each case.

Psychogenic Amnesia

Severe cases of trauma may lead to psychogenic amnesia, or the loss of all memories occurring around the event.

This page is based on the copyrighted Wikipedia article < https://en.wikipedia.org/wiki/Motivated_forgetting >; it is used under the Creative Commons Attribution-ShareAlike 3.0 Unported License (CC-BY-SA). You may redistribute it, verbatim or modified, providing that you comply with the terms of the CC-BY-SA.
